Monte Carlo simulations use random sampling and statistical analysis to solve complex problems with uncertain parameters, offering a powerful tool for modeling risk and uncertainty in various fields.

Overview of Monte Carlo Simulation

Monte Carlo simulation is a numerical technique that uses random sampling and statistical analysis to solve complex problems involving uncertainty. It generates random numbers to mimic real-world scenarios, which allows probabilities and outcomes to be estimated. The method is widely used in finance, engineering, and science to model risk, optimize systems, and forecast future events. By repeating the simulation many times and aggregating the results, it builds up a distribution of possible outcomes and their likelihoods, which can then inform decisions.
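
As a minimal illustration of estimating a probability by repeated random trials, the sketch below estimates the chance that at least two people in a group of 23 share a birthday. It assumes 365 equally likely birthdays and ignores leap years; the function name, seed, and trial count are illustrative choices.

```python
import random


def shared_birthday_probability(group_size: int, trials: int, seed: int = 0) -> float:
    """Estimate P(at least two people in the group share a birthday).

    Assumes 365 equally likely birthdays and ignores leap years.
    """
    rng = random.Random(seed)  # a seeded generator makes the run reproducible
    hits = 0
    for _ in range(trials):
        birthdays = [rng.randrange(365) for _ in range(group_size)]
        if len(set(birthdays)) < group_size:  # a repeated value means a shared birthday
            hits += 1
    return hits / trials


estimate = shared_birthday_probability(group_size=23, trials=20_000)
print(f"estimated probability: {estimate:.3f}")  # the exact value is about 0.507
```

The fraction of trials with a shared birthday is the estimate; more trials narrow its spread around the exact value.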

Importance of Random Sampling in Monte Carlo Methods

Random sampling is the cornerstone of Monte Carlo methods, enabling the simulation of real-world uncertainty. Uncertain parameters, such as material properties or market fluctuations, are represented as random variables, and each run draws a fresh value for every one of them. Collecting the outputs of many runs yields estimates of probabilities and outcomes for complex systems. Provided the chosen input distributions describe the real variability well, the simulation reproduces that variability, which is what makes Monte Carlo methods valuable for decision-making in uncertain environments across many fields.
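
To make this concrete, here is a minimal uncertainty-propagation sketch. It pushes random draws of two uncertain inputs through the textbook tip-deflection formula for an end-loaded cantilever, deflection = P L^3 / (3 E I). The load and stiffness distributions, the beam length, and the second moment of area are hypothetical values, and treating the inputs as independent normal variables is an assumption.

```python
import random
import statistics


def cantilever_deflection(load_n: float, length_m: float, youngs_pa: float, inertia_m4: float) -> float:
    # Tip deflection of a cantilever with a point load at the free end: P L^3 / (3 E I)
    return load_n * length_m**3 / (3 * youngs_pa * inertia_m4)


def simulate_deflections(n: int, seed: int = 1) -> list:
    rng = random.Random(seed)
    results = []
    for _ in range(n):
        load = rng.gauss(10_000, 1_000)   # hypothetical: load ~ N(10 kN, 1 kN)
        youngs = rng.gauss(200e9, 10e9)   # hypothetical: E ~ N(200 GPa, 10 GPa)
        results.append(cantilever_deflection(load, 2.0, youngs, 8e-6))  # L = 2 m, I = 8e-6 m^4
    return results


samples = simulate_deflections(50_000)
mean_mm = statistics.fmean(samples) * 1000
p95_mm = statistics.quantiles(samples, n=20)[-1] * 1000  # last of 19 cut points = 95th percentile
print(f"mean deflection = {mean_mm:.2f} mm, 95th percentile = {p95_mm:.2f} mm")
```

The output is a distribution rather than a single number, so quantities such as a high percentile can be read off directly.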

What is the Monte Carlo Method?

The Monte Carlo method is a computational technique using random sampling and statistical analysis to solve deterministic or probabilistic problems through repeated simulations.

Definition and Basic Principles

The Monte Carlo method is a computational technique that relies on random sampling and statistical analysis to solve mathematical problems. It draws random samples from a probability distribution and uses statistics of those samples, most often their average, to estimate a numerical result; by the law of large numbers, the estimate converges to the true value as the number of samples grows. This makes the method particularly useful for problems involving uncertainty or randomness where analytical solutions are difficult to obtain. Because a deterministic quantity such as an integral can be rewritten as an expected value, the same approach serves as a versatile tool for both deterministic and probabilistic problem-solving.
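
The basic principle can be illustrated with an integral. Writing the integral of f over [a, b] as (b - a) E[f(U)] for U uniform on [a, b], the sample mean of f at random points gives an estimate, and the sample standard deviation gives its standard error. The integrand e^(-x^2) on [0, 1] is an arbitrary illustrative choice with a known value of about 0.7468.

```python
import math
import random
import statistics


def mc_integrate(f, a: float, b: float, n: int, seed: int = 2) -> tuple:
    """Estimate the integral of f over [a, b] as (b - a) times the mean of f at uniform points.

    Returns the estimate and its standard error.
    """
    rng = random.Random(seed)
    values = [f(rng.uniform(a, b)) for _ in range(n)]
    estimate = (b - a) * statistics.fmean(values)
    std_error = (b - a) * statistics.stdev(values) / math.sqrt(n)
    return estimate, std_error


est, se = mc_integrate(lambda x: math.exp(-x * x), 0.0, 1.0, n=100_000)
print(f"estimate = {est:.4f} +/- {se:.4f}")  # the exact value is about 0.7468
```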

Historical Context and Development

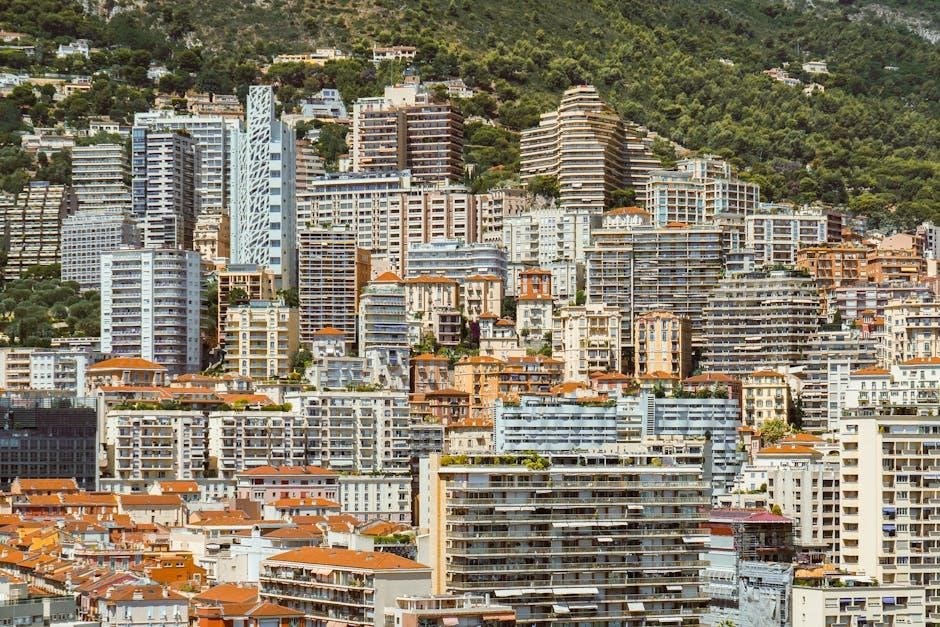

The Monte Carlo method traces its roots to the mid-20th century, emerging from the need to solve complex probabilistic problems in physics. It is named after the Casino de Monte-Carlo in Monaco, a nod to the role of chance in the simulations. The method was first used in nuclear physics research at Los Alamos in the late 1940s, where pioneers such as Stanislaw Ulam and John von Neumann laid its foundation by using random sampling to estimate solutions to neutron-diffusion problems, and it gained prominence as electronic computers became available. Over time, it evolved into a versatile tool across disciplines, driven by computational advances and the need for probabilistic modeling.

Applications of Monte Carlo Simulations

Monte Carlo simulations are widely applied in finance, engineering, and scientific research to model uncertainty, optimize systems, and make data-driven decisions under complex conditions.

Applications in Finance and Risk Analysis

Monte Carlo simulations are extensively used in finance for portfolio optimization, derivatives pricing, and risk assessment. They enable the evaluation of complex financial models under uncertainty, such as market volatility and credit risk. By generating multiple scenarios, these simulations help quantify potential outcomes, aiding in informed decision-making and stress testing. This method is particularly valuable for analyzing non-linear instruments and assessing the impact of variable market conditions on financial portfolios and investments.
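
As a minimal sketch of derivatives pricing by simulation, the code below prices a European call option by averaging discounted payoffs over simulated terminal prices. It assumes geometric Brownian motion under the risk-neutral measure with constant interest rate and volatility (the Black-Scholes assumptions), so the closed-form Black-Scholes price is available as a check. The parameter values are hypothetical.

```python
import math
import random
from statistics import NormalDist, fmean


def mc_european_call(s0: float, strike: float, rate: float, vol: float,
                     maturity: float, n: int, seed: int = 3) -> float:
    # Risk-neutral GBM: S_T = S0 * exp((r - vol^2 / 2) * T + vol * sqrt(T) * Z), Z ~ N(0, 1)
    rng = random.Random(seed)
    drift = (rate - 0.5 * vol**2) * maturity
    diffusion = vol * math.sqrt(maturity)
    payoffs = [max(s0 * math.exp(drift + diffusion * rng.gauss(0.0, 1.0)) - strike, 0.0)
               for _ in range(n)]
    return math.exp(-rate * maturity) * fmean(payoffs)  # discounted mean payoff


def black_scholes_call(s0: float, strike: float, rate: float, vol: float, maturity: float) -> float:
    # Closed-form price under the same assumptions, used here only as a check
    d1 = (math.log(s0 / strike) + (rate + 0.5 * vol**2) * maturity) / (vol * math.sqrt(maturity))
    d2 = d1 - vol * math.sqrt(maturity)
    phi = NormalDist().cdf
    return s0 * phi(d1) - strike * math.exp(-rate * maturity) * phi(d2)


params = dict(s0=100.0, strike=100.0, rate=0.05, vol=0.2, maturity=1.0)  # hypothetical inputs
print(f"Monte Carlo:   {mc_european_call(**params, n=100_000):.3f}")
print(f"Black-Scholes: {black_scholes_call(**params):.3f}")
```

For this plain option the closed form makes simulation unnecessary; the same loop becomes useful when the payoff depends on the whole price path and no formula exists.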

Applications in Engineering and Scientific Research

Monte Carlo simulations are widely applied in engineering and scientific research to model complex systems and analyze uncertainty. In engineering, they are used to optimize designs, assess reliability, and predict material behavior under varying conditions. In scientific research, they are used to study stochastic phenomena in fields such as particle physics and climate modeling. They also aid in solving inverse problems and estimating parameters from experimental data, improving accuracy and efficiency in research and development.
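
A basic reliability calculation of this kind estimates the probability that a load effect S exceeds a resistance R. The sketch below assumes, hypothetically, that R and S are independent normal variables with the stated means and standard deviations. That assumption also yields a closed-form answer, Phi(-beta) with beta = (mu_R - mu_S) / sqrt(sd_R^2 + sd_S^2), which serves as a check on the simulation.

```python
import math
import random
from statistics import NormalDist


def failure_probability(mu_r: float, sd_r: float, mu_s: float, sd_s: float,
                        n: int, seed: int = 4) -> float:
    """Estimate P(R < S), the chance that the load effect exceeds the resistance."""
    rng = random.Random(seed)
    failures = sum(1 for _ in range(n) if rng.gauss(mu_r, sd_r) < rng.gauss(mu_s, sd_s))
    return failures / n


def exact_failure_probability(mu_r: float, sd_r: float, mu_s: float, sd_s: float) -> float:
    # For independent normal R and S, R - S is normal, so P(R - S < 0) = Phi(-beta)
    beta = (mu_r - mu_s) / math.sqrt(sd_r**2 + sd_s**2)
    return NormalDist().cdf(-beta)


args = (300.0, 30.0, 200.0, 40.0)  # hypothetical resistance and load statistics, in kN
print(failure_probability(*args, n=200_000), exact_failure_probability(*args))
```

Simulation earns its keep when the limit state is nonlinear or the inputs are non-normal, where no such closed form exists.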

Random Number Generation in Monte Carlo Simulations

Monte Carlo simulations rely on pseudo-random number generators to produce numbers that behave like uniform draws on (0, 1), which are then transformed into the required distributions; because the generators are deterministic, results are reproducible when the seed is fixed.

Generation of Uniform and Non-Uniform Random Variables

Monte Carlo simulations begin by generating uniform random variables between 0 and 1, which serve as the foundation for non-uniform distributions. Two common techniques convert them into a target distribution. Inverse transform sampling applies the inverse of the target's cumulative distribution function (CDF) to a uniform draw. The acceptance-rejection method draws a candidate x from a simpler proposal density g and accepts it with probability f(x) / (M g(x)), where f is the target density and M is a constant with f(x) <= M g(x) everywhere. In both cases the resulting samples follow the desired probability density function (PDF), which is what makes the simulation outcomes reliable.
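
A minimal acceptance-rejection sketch follows. The target is assumed, for illustration, to be the Beta(2, 2) density f(x) = 6x(1 - x) on [0, 1], with a Uniform(0, 1) proposal g and the bound M = 1.5, the maximum of f. On average a fraction 1/M = 2/3 of candidates is accepted.

```python
import random
import statistics


def target_pdf(x: float) -> float:
    # Beta(2, 2) density on [0, 1]; its maximum is 1.5 at x = 0.5
    return 6.0 * x * (1.0 - x)


def rejection_sample(n: int, seed: int = 5) -> list:
    rng = random.Random(seed)
    bound = 1.5  # M with target_pdf(x) <= M * g(x), where g = 1 is the Uniform(0, 1) density
    samples = []
    while len(samples) < n:
        x = rng.random()                            # candidate from the proposal
        if rng.random() <= target_pdf(x) / bound:   # accept with probability f(x) / (M g(x))
            samples.append(x)
    return samples


draws = rejection_sample(50_000)
print(statistics.fmean(draws), statistics.variance(draws))  # Beta(2, 2) has mean 0.5, variance 0.05
```

A tighter bound M wastes fewer candidates, which is why proposals are chosen to resemble the target's shape.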

Role of Pseudo-Random Number Generators

Pseudo-random number generators (PRNGs) are essential in Monte Carlo simulations. These deterministic algorithms produce sequences of uniform random numbers whose statistical properties closely resemble those of true randomness, and the numbers are then transformed into the desired distributions. Because the whole sequence is determined by the initial seed, fixing the seed makes a simulation exactly reproducible, and the generator's quality (for example, its period and the absence of detectable correlations) directly affects the accuracy of the results. This makes PRNGs a cornerstone of modern computational simulation in diverse fields.
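
To show what "deterministic but random-looking" means in practice, the sketch below implements a linear congruential generator (LCG), one of the simplest PRNG families. The constants are the widely cited Numerical Recipes values. This generator is for illustration only; production work uses stronger generators, such as the Mersenne Twister behind Python's `random` module.

```python
class LinearCongruentialGenerator:
    """Illustrative LCG: x_{k+1} = (a * x_k + c) mod m, scaled to [0, 1).

    A teaching example only, not suitable for serious simulations.
    """

    def __init__(self, seed: int, a: int = 1664525, c: int = 1013904223, m: int = 2**32):
        self.state = seed % m
        self.a, self.c, self.m = a, c, m

    def random(self) -> float:
        # Advance the state, then scale it into [0, 1)
        self.state = (self.a * self.state + self.c) % self.m
        return self.state / self.m


gen_a = LinearCongruentialGenerator(seed=42)
gen_b = LinearCongruentialGenerator(seed=42)
first = [gen_a.random() for _ in range(5)]
second = [gen_b.random() for _ in range(5)]
print(first == second)  # True: the same seed reproduces the same sequence
```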

Variance Reduction Methods in Monte Carlo Simulations

Variance reduction methods enhance simulation efficiency by reducing the variability of results, enabling more accurate computations with fewer samples and improving overall computational feasibility.

Importance of Variance Reduction Techniques

Variance reduction techniques are essential for improving Monte Carlo efficiency: they reduce the variance of the estimator, so a given accuracy is reached with fewer samples. This matters most for problems that would otherwise require excessive computational resources or be infeasible. Techniques such as stratified sampling and antithetic variates lower the computational cost while maintaining accuracy, which makes them indispensable in practical applications across many fields.
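
The saving can be made concrete with a short calculation. Assuming N independent samples X_i and a comparable cost per sample, the plain Monte Carlo estimator, its variance, and the sample size needed to reach a target variance v are:

```latex
\hat{I}_N = \frac{1}{N}\sum_{i=1}^{N} f(X_i), \qquad
\operatorname{Var}\!\left(\hat{I}_N\right) = \frac{\sigma^2}{N}, \qquad
\sigma^2 = \operatorname{Var}\!\left(f(X)\right)

N = \frac{\sigma^2}{v}
\quad\Longrightarrow\quad
\text{with per-sample variance } \frac{\sigma^2}{k}: \quad
N' = \frac{\sigma^2}{k\,v} = \frac{N}{k}
```

So a technique that cuts the effective per-sample variance by a factor k reaches the same precision with k times fewer samples, provided its extra bookkeeping is cheap.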

Stratified Sampling and Antithetic Variates

Stratified sampling divides the sample space into non-overlapping strata and draws a prescribed number of samples from each, so that every region is represented in proportion to its probability; this removes the variance caused by samples clustering by chance. Antithetic variates pair each sample with a negatively correlated counterpart (for example, a uniform draw U with 1 - U), so that the errors of the two partially cancel when they are averaged; the gain is largest when the simulated quantity is monotone in its inputs. Both methods leave the estimator unbiased while improving efficiency, and both are widely applied in finance and engineering.
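
The sketch below compares the two techniques with plain sampling on a simple test integral, the integral of e^x over [0, 1], which equals e - 1 (an illustrative choice). Each estimator spends the same budget of n integrand evaluations, and its variance is measured by repeating the whole estimate many times; the sample size and repetition count are hypothetical. Both the stratified and the antithetic estimators should scatter far less than plain sampling.

```python
import math
import random
import statistics


def integrand(x: float) -> float:
    return math.exp(x)  # on [0, 1] its exact integral is e - 1


def plain_estimate(rng: random.Random, n: int) -> float:
    return statistics.fmean([integrand(rng.random()) for _ in range(n)])


def stratified_estimate(rng: random.Random, n: int) -> float:
    # One uniform draw inside each of n equal-width strata [k/n, (k+1)/n)
    return statistics.fmean([integrand((k + rng.random()) / n) for k in range(n)])


def antithetic_estimate(rng: random.Random, n: int) -> float:
    # n // 2 pairs (U, 1 - U); exp is monotone, so the two values in a pair are negatively correlated
    pairs = n // 2
    total = 0.0
    for _ in range(pairs):
        u = rng.random()
        total += 0.5 * (integrand(u) + integrand(1.0 - u))
    return total / pairs


def estimator_variance(method, n: int = 100, reps: int = 2_000, seed: int = 6) -> float:
    # Repeat the whole estimate many times and measure how much it scatters
    rng = random.Random(seed)
    return statistics.variance([method(rng, n) for _ in range(reps)])


for method in (plain_estimate, stratified_estimate, antithetic_estimate):
    print(method.__name__, estimator_variance(method))
```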

Probability Density Functions (PDFs) in Monte Carlo Methods

PDFs are essential in Monte Carlo simulations for modeling stochastic processes, enabling the generation of random variables with specific distributions. They form the backbone of probabilistic analyses.

Using PDFs for Sampling and Distribution

PDFs are fundamental in Monte Carlo methods because they define the distribution of each random variable. Through the corresponding cumulative distribution function, methods such as inverse transform sampling convert uniform random numbers into draws from specific distributions, such as the normal or exponential. When the chosen distributions match the real-world variability being modeled, the simulation reflects that variability, and specifying the underlying PDFs makes it possible to analyze uncertainty and stochastic processes. This makes PDFs indispensable in Monte Carlo modeling and analysis.
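
For the exponential distribution the inverse CDF has a closed form: F(x) = 1 - e^(-lambda x) gives F^(-1)(u) = -ln(1 - u) / lambda. A minimal sketch with a hypothetical rate lambda = 2 follows; in everyday Python code, `random.expovariate` does the same job.

```python
import math
import random
import statistics


def sample_exponential(rate: float, n: int, seed: int = 7) -> list:
    # Inverse transform: F(x) = 1 - exp(-rate * x), so F^{-1}(u) = -ln(1 - u) / rate
    rng = random.Random(seed)
    # random() lies in [0, 1), so 1 - u lies in (0, 1] and the logarithm is always defined
    return [-math.log(1.0 - rng.random()) / rate for _ in range(n)]


draws = sample_exponential(rate=2.0, n=100_000)
print(statistics.fmean(draws))  # the theoretical mean is 1 / rate = 0.5
```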

Transforming Uniform Random Variables to Specific Distributions

Uniform random variables are transformed into specific distributions using techniques such as inverse transform sampling. If U is uniform on (0, 1) and F is a distribution's CDF, then F^{-1}(U) has CDF F, so applying the inverse CDF to uniform random numbers produces draws from that distribution. For example, normal variables can be generated by applying the inverse normal CDF to uniform draws. This transformation is crucial for simulating real-world phenomena, enabling Monte Carlo methods to model complex systems in fields such as finance, engineering, and scientific research.
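
When the inverse CDF has no closed form, as for the normal distribution, it can be evaluated numerically. The sketch below assumes Python 3.8 or later for `statistics.NormalDist.inv_cdf`; the mean and standard deviation are hypothetical. In practice, dedicated samplers such as `random.gauss` are the usual choice for normal draws.

```python
import random
import statistics
from statistics import NormalDist


def sample_normal_inverse_cdf(mu: float, sigma: float, n: int, seed: int = 8) -> list:
    rng = random.Random(seed)
    dist = NormalDist(mu, sigma)
    samples = []
    while len(samples) < n:
        u = rng.random()
        if u > 0.0:  # inv_cdf requires 0 < p < 1, and random() can return exactly 0.0
            samples.append(dist.inv_cdf(u))
    return samples


draws = sample_normal_inverse_cdf(mu=10.0, sigma=2.0, n=50_000)
print(statistics.fmean(draws), statistics.stdev(draws))  # should be close to 10 and 2
```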

Markov Chain Monte Carlo (MCMC) Methods

MCMC extends Monte Carlo methods to distributions that cannot be sampled directly, enabling Bayesian inference and the analysis of complex probabilistic models.

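Markov Chain Monte Carlo (MCMC) is a family of algorithms that draw samples from a probability distribution by constructing a Markov chain whose stationary distribution is the target distribution. Many MCMC algorithms, such as Metropolis-Hastings, need the target density only up to a normalizing constant, which is why MCMC is central to Bayesian inference, where the posterior's normalizing constant is usually intractable. Widely used in machine learning, statistics, and physics, MCMC generates samples from high-dimensional distributions, providing insights into uncertain parameters. Its applications span model fitting, predictive analytics, and decision-making under uncertainty, making it a cornerstone of modern computational statistics and data science.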

Metropolis-Hastings Algorithm and Gibbs Sampling

The Metropolis-Hastings algorithm is a widely used MCMC method for sampling from complex probability distributions. From the current state x it draws a candidate x' from a proposal distribution q(x' | x) and accepts it with probability min(1, π(x') q(x | x') / (π(x) q(x' | x))), where π is the target density; otherwise the chain stays at x. Gibbs sampling, another MCMC technique, updates each variable in turn by drawing it from its conditional distribution given the current values of all the others. Both algorithms are foundational in computational statistics, enabling the exploration of high-dimensional probability spaces in Bayesian inference, machine learning, and statistical modeling.
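
A compact sketch of both algorithms follows. The Metropolis-Hastings part uses a symmetric Gaussian random-walk proposal, for which the q terms in the acceptance probability cancel, and targets a normal density given only up to a constant. The Gibbs part samples a standard bivariate normal with correlation rho, whose full conditionals are x | y ~ N(rho y, 1 - rho^2) and y | x ~ N(rho x, 1 - rho^2). The targets, step size, and burn-in lengths are illustrative assumptions, not tuned values.

```python
import math
import random
import statistics


def random_walk_metropolis(log_target, x0: float, step: float, n: int, seed: int = 9) -> list:
    """Metropolis-Hastings with a symmetric Gaussian proposal, so the q terms cancel."""
    rng = random.Random(seed)
    x, chain = x0, []
    for _ in range(n):
        candidate = x + rng.gauss(0.0, step)
        log_ratio = log_target(candidate) - log_target(x)
        # Accept with probability min(1, pi(candidate) / pi(x)), computed on the log scale
        if log_ratio >= 0.0 or rng.random() < math.exp(log_ratio):
            x = candidate
        chain.append(x)  # on rejection the current state is repeated
    return chain


def gibbs_bivariate_normal(rho: float, n: int, seed: int = 10) -> list:
    """Gibbs sampler for a standard bivariate normal with correlation rho."""
    rng = random.Random(seed)
    x = y = 0.0
    cond_sd = math.sqrt(1.0 - rho**2)
    draws = []
    for _ in range(n):
        x = rng.gauss(rho * y, cond_sd)  # x | y ~ N(rho * y, 1 - rho^2)
        y = rng.gauss(rho * x, cond_sd)  # y | x ~ N(rho * x, 1 - rho^2)
        draws.append((x, y))
    return draws


# Hypothetical target: a normal with mean 3 and sd 1, specified only up to a constant
chain = random_walk_metropolis(lambda x: -0.5 * (x - 3.0) ** 2, x0=0.0, step=1.0, n=50_000)
burned = chain[5_000:]  # discard the burn-in period
print(statistics.fmean(burned), statistics.stdev(burned))

pairs = gibbs_bivariate_normal(rho=0.8, n=50_000)[1_000:]
# With zero means and unit variances, E[XY] equals the correlation rho
print(statistics.fmean([x * y for x, y in pairs]))
```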

Advantages and Limitations of Monte Carlo Simulations

Monte Carlo simulations offer flexibility and versatility in solving complex problems but require significant computational resources, balancing accuracy with efficiency in large-scale applications.

Flexibility and Versatility of the Method

Monte Carlo simulations are highly adaptable, making them applicable across diverse fields like finance, engineering, and scientific research. Their flexibility allows modeling of both deterministic and probabilistic systems, handling uncertain parameters effectively. The method integrates seamlessly with probability density functions (PDFs) and can generate various distributions, from uniform to complex ones, offering a robust framework for analyzing complex phenomena and systems with varying degrees of uncertainty and randomness.

Computational Intensity and Accuracy Trade-offs

Monte Carlo simulations can require significant computational resources because accurate results need a large number of random iterations. The standard error of an estimate shrinks only in proportion to 1/√N, where N is the number of samples, so halving the error requires four times as many samples and each extra decimal digit of accuracy costs roughly a hundredfold increase. Balancing computational intensity against the desired accuracy is therefore critical, since in real-world applications time and resource constraints often limit the number of iterations that is feasible for a given problem.
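
Under the normal approximation, the half-width of a confidence interval is about z sigma / sqrt(N), so the sample size for a target tolerance epsilon is N = (z sigma / epsilon)^2, with sigma estimated from a short pilot run. The sketch below applies this to a hypothetical model output; the function name, pilot size, and tolerances are illustrative. Tightening the tolerance tenfold raises the required N about a hundredfold.

```python
import math
import random
import statistics
from statistics import NormalDist


def required_samples(pilot: list, tolerance: float, confidence: float = 0.95) -> int:
    """Samples needed so the confidence-interval half-width is about `tolerance`.

    Normal approximation: half-width = z * sigma / sqrt(N), so N = (z * sigma / tolerance)^2.
    """
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95 % confidence
    sigma = statistics.stdev(pilot)                 # estimated from the pilot run
    return math.ceil((z * sigma / tolerance) ** 2)


rng = random.Random(11)
pilot_run = [math.exp(-rng.random() ** 2) for _ in range(1_000)]  # hypothetical model output
for tol in (1e-2, 1e-3):
    print(tol, required_samples(pilot_run, tol))
```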